ChatGPT Hallucination: Why It Makes Things Up & How to Fix It

ChatGPT hallucinates because it doesn't know your company. Learn why it makes things up and how to ground it in your docs to get accurate answers. Free guide.

Insights on knowledge management, AI, and productivity

Understand AI memory layers and how they work. Compare Claude's native memory, ChatGPT's memory, and team-friendly alternatives like Context Link. Step-by-step guide.

Learn what AI hallucinations are, why they happen, and how grounding AI in your data prevents false output. Step-by-step guide with real examples.

Learn the key differences between context engineering and prompt engineering. Understand when to use each, why context engineering scales better, and how to implement it for your team.

Learn how to build an AI knowledge base from your existing Notion, Google Docs, and website content. No migration, no coding. Works across ChatGPT, Claude, and more.

AI doesn't give you a voice. If you lack opinions, examples, and receipts, the model will average you out. Established companies already have that pile.

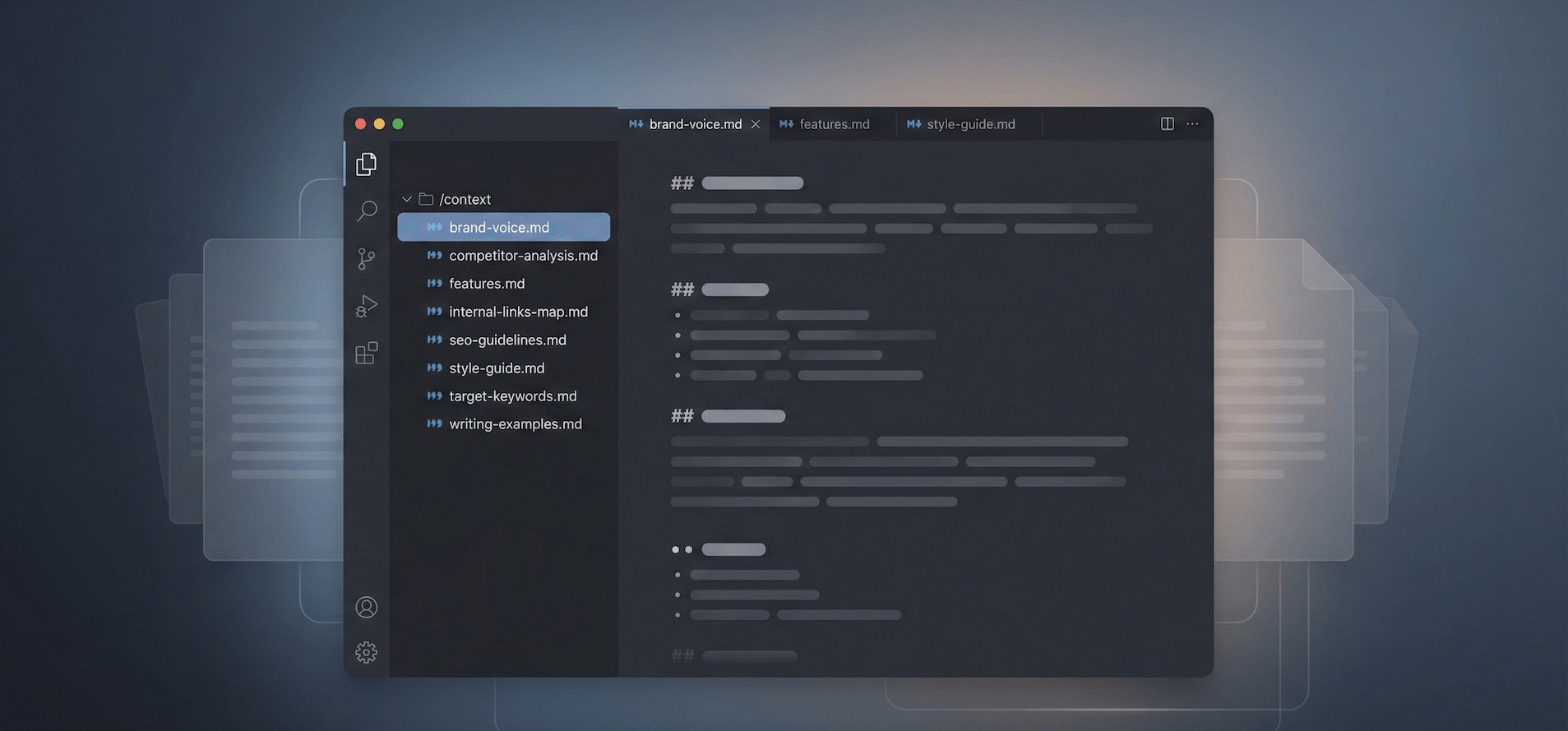

Context engineering means designing what AI knows before it answers. Learn 4 levels, from copy-paste to automated context links, and get better AI answers.

Turn your Google Drive docs into an AI agent. Compare 4 methods, from Google Workspace Studio to model-agnostic context links. Find the right fit.

Turn your Notion workspace into an AI agent. Compare 4 methods, from Notion's built-in agent to model-agnostic context links. Find the right fit.

Turn your website content into a working AI agent. Compare 4 methods, from chatbot widgets to model-agnostic context links. Find the right fit for your team.